By Cliff Robinson | ServeTheHome

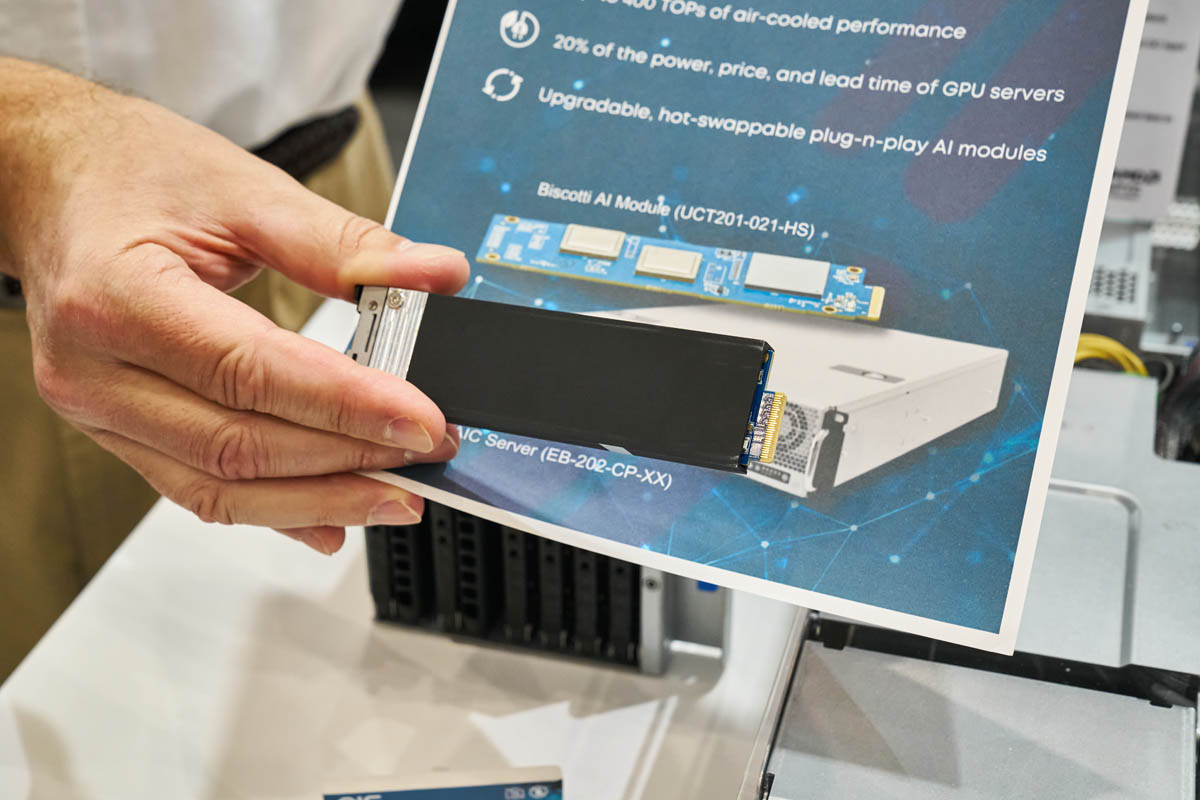

At Computex 2024, the team saw a dual Hailo-8 AI accelerator module in an E1.S form factor at the AIC booth. The Unigen Biscotti UCT201-021-HS is a 9.5mm E1.S module that can add low-power AI inference acceleration to servers. If you were wondering, we asked: -HS denotes the 9.5mm heatsink version, and -NC is the version with no heatsink. Either way, it is a cool module, and AIC is using it in a cool way here.

Unigen Biscotti E1.S At the AIC Booth Computex 2024

At the AIC booth, we saw a familiar server with a new twist. Instead of just having replaceable front storage, it now has E1.S AI acceleration capabilities with the Unigen Biscotti.

Here is the module. Behind it, you can see the bare card on the paper. The card houses a 12-lane PCIe switch and two Hailo-8 AI accelerators onboard.
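We were not given a breakdown of how those 12 lanes are allocated. One plausible split treats the card as a switch with one upstream port and two downstream ports. It assumes an x4 host link through the E1.S connector and an x4 link to each Hailo-8. The sketch below works through that lane arithmetic. The link widths and the `lane_budget` helper are illustrative assumptions, not Unigen's published topology.

```python
# Hypothetical lane budget for the 12-lane PCIe switch on a dual Hailo-8 E1.S card.
# ASSUMPTIONS (not confirmed by Unigen): x4 upstream to the host via the E1.S
# connector, and x4 downstream to each Hailo-8 accelerator.
UPSTREAM_LANES = 4          # assumed host link width through the E1.S edge connector
LANES_PER_ACCELERATOR = 4   # assumed link width to each Hailo-8
ACCELERATORS = 2            # two Hailo-8 chips per module (from the article)
SWITCH_LANES = 12           # switch lane count (from the article)


def lane_budget(upstream: int, per_device: int, devices: int) -> int:
    """Hypothetical helper: lanes used by one upstream port plus N downstream ports."""
    return upstream + per_device * devices


total = lane_budget(UPSTREAM_LANES, LANES_PER_ACCELERATOR, ACCELERATORS)
print(f"{total} of {SWITCH_LANES} switch lanes used")

# Ratio of total downstream lanes to the single upstream link.
ratio = (LANES_PER_ACCELERATOR * ACCELERATORS) / UPSTREAM_LANES
print(f"downstream:upstream = {ratio:.0f}:1")
```

Under these assumptions, the two accelerators would consume the whole 12-lane budget and share one x4 uplink at a 2:1 ratio. That would be a reasonable trade-off for inference workloads, which tend to move far less data over PCIe than, for example, storage traffic.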

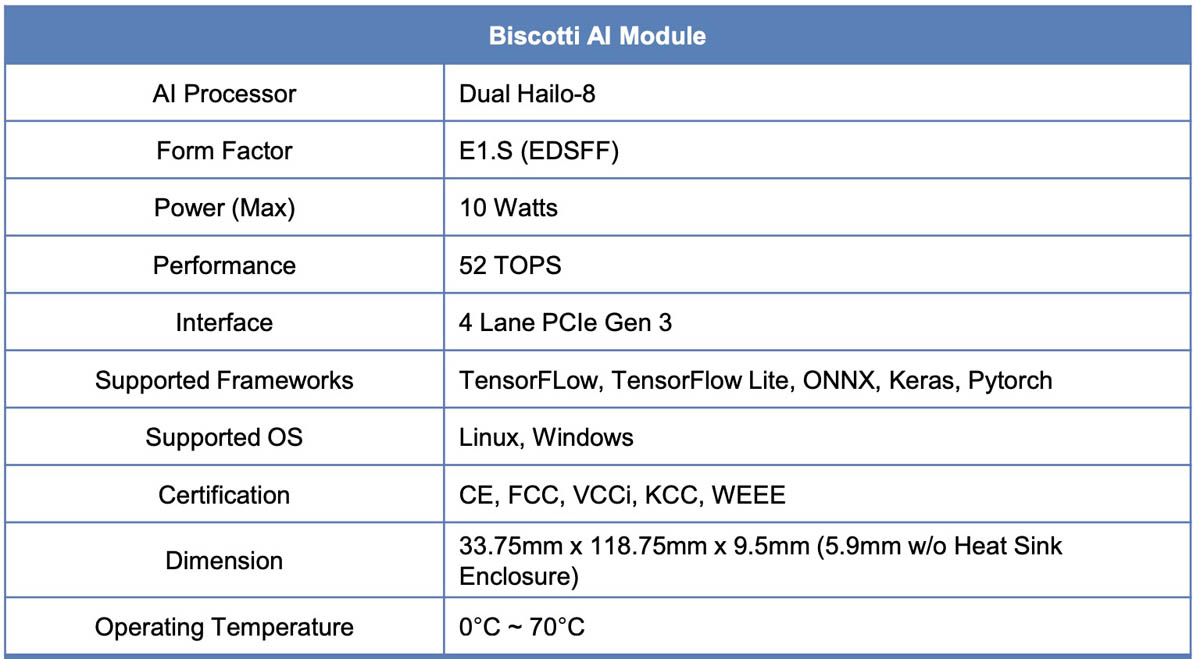

Here are the specs of each module. One of the more interesting specs is that, even with the PCIe switch, these are still 10W modules. That makes them relatively easy for servers to cool, much like SSDs.

Years ago, in our "E1 and E3 EDSFF to Take Over from M.2 and 2.5 in SSDs" piece, we noted that accelerators would move into EDSFF as well. This is a good example of that.

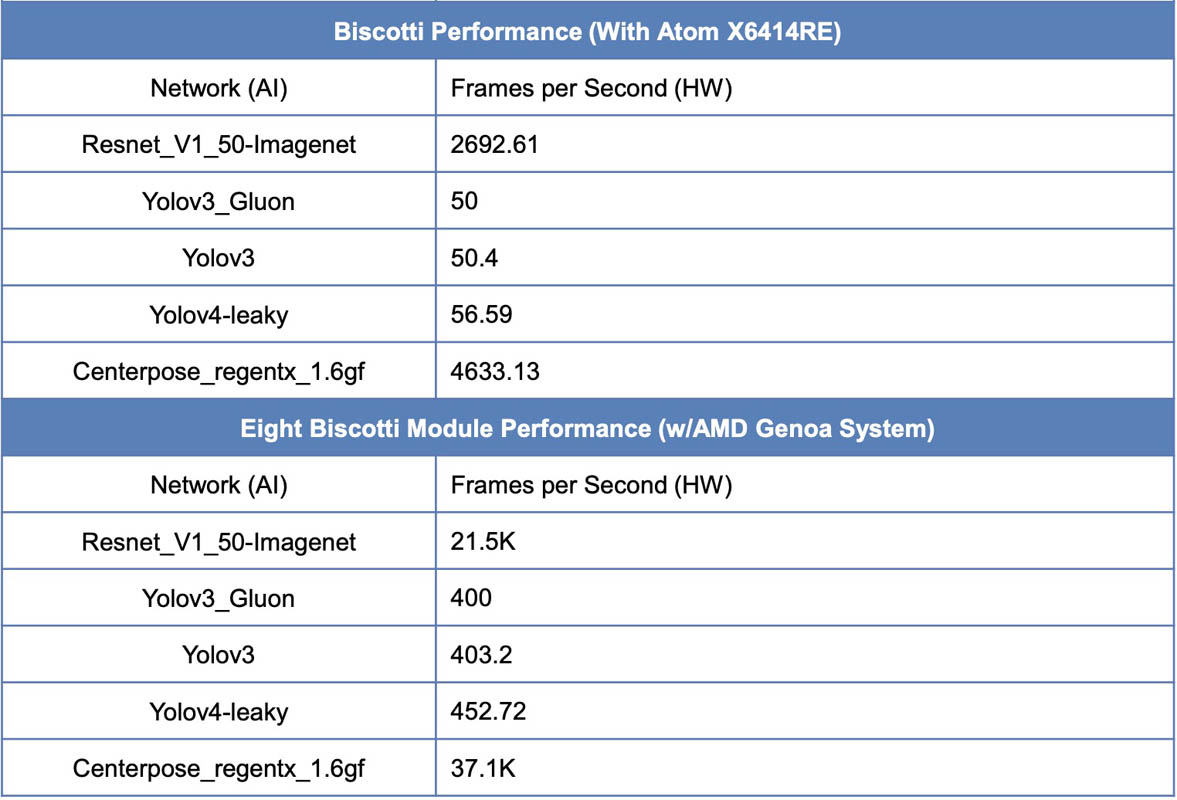

In terms of performance, here is what we found using the cards.

The server being shown is an AIC EB202 with the E1.S expansion tray. We first covered the AIC EB202 at SC22, and it has a really cool feature: the drive cage can be swapped. AIC offers various options, including E3.S and E1.S cages.

The entire AIC platform is designed for edge deployments. Using the Biscotti E1.S seems to be a way to add AI acceleration to edge servers at lower cost and lower power than GPUs.

Final Words

This was just something cool that the team saw in Taipei earlier this month and that we wanted to share. It will be interesting to see how edge servers evolve. Some think that AI acceleration onboard CPUs via features like Intel AMX or NPUs will cover a lot of the market. Some think that GPUs like the NVIDIA L4 will be needed. Yet others seem to think that cards with arrays of low-power TPUs will be the way forward. Our sense is that it is probably a mix of all of those solutions depending on the power and performance profile that folks are trying to hit.