Summary

This whitepaper explores the concept of developing data centers focused solely on AI inference. Up to 90% of AI operations are inference, versus 10% for training. Training requires specialized processing to create the neural networks that are then used for inference operations, and it is the primary driver of the power requirements mentioned by the IEA above. Inference, on the other hand, can be performed far more power-efficiently. The benefits of developing inference-only data centers can be significant:

- Reduced initial cost for inference servers compared with training servers

- Reduced Total Cost of Ownership (TCO) over the lifetime for inference servers

- Inference servers with TPUs can be air-cooled, avoiding expensive and difficult-to-deploy liquid cooling schemes

- Data centers with air-cooled servers use far fewer resources, reducing strain on local power and water

The sections below compare the cooling, electrical, HVAC, power, and infrastructure requirements of training servers and inference servers.

The Problem

Training vs. Inference

Training requires semiconductors with floating-point units (FPUs), which perform complex calculations that can take many clock cycles to complete. GPUs were originally used to calculate graphics shaders and were later adopted for general-purpose GPU (GPGPU) computing. GPUs are more specialized than CPUs, but they are built from fairly large logic blocks that consume a significant amount of energy. In contrast, inference can typically be performed with 8-bit integer (INT8) arithmetic. These calculations are far simpler: highly repetitive tensor/matrix multiplications that logic blocks designed specifically for that operation can complete in very few clock cycles.
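To make the contrast concrete, here is a minimal Python sketch of INT8 inference arithmetic: values are quantized to signed 8-bit integers, multiplied using integer multiply-accumulate (MAC) operations, and scaled back to floating point. The symmetric per-tensor scaling, the helper names, and the sample matrices are illustrative assumptions, not a description of any particular accelerator; real TPUs perform these MACs in dedicated hardware arrays rather than in software loops.

```python
# Minimal sketch of INT8 inference arithmetic (illustrative assumptions:
# symmetric per-tensor scales, wide-integer accumulation, tiny sample matrices).

def quantize_int8(matrix, scale):
    """Map floats to signed 8-bit integers: round(x / scale), clamped to [-128, 127]."""
    return [[max(-128, min(127, round(x / scale))) for x in row] for row in matrix]


def int8_matmul(a, b):
    """Integer matrix multiply; each output is a sum of multiply-accumulate (MAC) steps."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    cols = len(b[0])
    out = []
    for row in a:
        out_row = []
        for j in range(cols):
            acc = 0  # plays the role of a 32-bit hardware accumulator
            for k in range(inner):
                acc += row[k] * b[k][j]  # one MAC operation
            out_row.append(acc)
        out.append(out_row)
    return out


def dequantize(acc, scale_a, scale_b):
    """Rescale integer accumulators to floats using the product of the input scales."""
    return [[v * scale_a * scale_b for v in row] for row in acc]


weights = [[0.5, -0.25], [0.125, 1.0]]
activations = [[1.0, 2.0], [-1.0, 0.5]]
scale_w = 1.0 / 127  # largest weight magnitude (1.0) maps to 127
scale_x = 2.0 / 127  # largest activation magnitude (2.0) maps to 127

q_w = quantize_int8(weights, scale_w)
q_x = quantize_int8(activations, scale_x)
approx = dequantize(int8_matmul(q_w, q_x), scale_w, scale_x)
print(approx)  # close to the exact float result [[0.75, 0.875], [-0.875, 0.75]]
```

The inner loop is the point: every step is one small integer multiply and add, which is exactly the kind of operation a dedicated matrix unit can replicate thousands of times in silicon.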

Tensor processing for inference is more efficient per clock cycle than GPU or CPU processing

Image Source: Opengenus Foundation

Training is used to create a neural network. Once the network has been created, inference is used for all subsequent tasks (e.g., a model for identifying cars, or a Large Language Model (LLM) for handling records in an accounting office). A GPU used for training can have over two thousand shader units/FPUs. Tensor Processing Units (also called AI accelerators, neural processing units, etc.) can have 10,000 or more modules available for matrix multiplication. Current TPUs deliver up to 50 trillion operations per second (TOPS) at 12 TOPS/Watt. Current GPUs, although required for training, can be less efficient at inference tasks and may provide only 1 to 2 TOPS/Watt, requiring 6 to 12 times as much power to achieve the same inference performance.
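The 6x to 12x figure follows directly from the efficiency numbers above: for the same throughput, power scales inversely with TOPS/Watt. A minimal Python sketch of that arithmetic, using the 12 TOPS/Watt and 1 to 2 TOPS/Watt figures from the text; the 3200 TOPS target is only an example value (the 64-TPU server described later), and it assumes peak TOPS ratings are comparable between devices.

```python
# Minimal sketch: power needed for a fixed inference throughput at different
# efficiencies. Efficiency figures are those quoted in the text; the target
# throughput is an example value.

def watts_for_throughput(tops: float, tops_per_watt: float) -> float:
    """Power (W) required to sustain `tops` at an efficiency of `tops_per_watt`."""
    if tops_per_watt <= 0:
        raise ValueError("efficiency must be positive")
    return tops / tops_per_watt


TARGET_TOPS = 3200.0   # example throughput
TPU_TOPS_PER_W = 12.0  # accelerator efficiency from the text

tpu_watts = watts_for_throughput(TARGET_TOPS, TPU_TOPS_PER_W)
for gpu_tops_per_w in (2.0, 1.0):  # GPU inference efficiency range from the text
    gpu_watts = watts_for_throughput(TARGET_TOPS, gpu_tops_per_w)
    print(f"GPU at {gpu_tops_per_w:g} TOPS/W needs {gpu_watts:.0f} W, "
          f"{gpu_watts / tpu_watts:.0f}x the accelerator's {tpu_watts:.0f} W")
```

Because the target throughput cancels out, the ratio is simply 12 / 2 = 6 and 12 / 1 = 12 regardless of the workload size chosen.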

Cooling and Power

Die Cooling

Because GPUs draw so much power during both training and inference, they require an extraordinary amount of cooling to stay within operating temperatures. Dissipating 250 Watts of heat from a 20x20mm (400 mm²) die is a difficult technical problem and generally requires some form of liquid cooling (or even immersion cooling).

Image Source: AnandTech

Conversely, inference-only silicon may dissipate only 4 or 5 Watts from a 5x5mm (25 mm²) die due to lower frequencies. With only 2% of the power concentrated in one-sixteenth of the die area, the heat per square millimeter is roughly one-third that of the GPU or less (0.2 W/mm² versus 0.625 W/mm²), low enough to be removed by conventional air-cooled heat sinks.
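The heat-density comparison can be checked with simple arithmetic: average heat flux is power divided by die area. This sketch uses the die sizes and power figures from the two paragraphs above and assumes square dies with heat spread evenly across them, which understates real hot spots.

```python
# Minimal sketch: average heat flux for the two dies described in the text.
# Assumes square dies and uniformly distributed heat (real dies have hot spots).

def heat_flux_w_per_mm2(watts: float, die_side_mm: float) -> float:
    """Average heat flux in W/mm^2: power divided by die area (side squared)."""
    return watts / die_side_mm ** 2


gpu_flux = heat_flux_w_per_mm2(250, 20)  # 250 W over 20 x 20 mm = 400 mm^2
tpu_flux = heat_flux_w_per_mm2(5, 5)     # 5 W over 5 x 5 mm = 25 mm^2

power_fraction = 5 / 250               # share of the GPU's power
area_fraction = (5 * 5) / (20 * 20)    # share of the GPU's die area

print(f"GPU: {gpu_flux:.3f} W/mm^2, inference die: {tpu_flux:.3f} W/mm^2")
print(f"power fraction {power_fraction:.0%}, area fraction {area_fraction:.2%}")
print(f"inference die runs at {tpu_flux / gpu_flux:.0%} of the GPU's heat flux")
```

Note that halving... rather, quartering the side length (20 mm to 5 mm) divides the area by sixteen, which is why the flux ratio (about 32%) is much less dramatic than the 2% power ratio.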

Servers/Racks

Current training servers may need to cool up to 8 GPUs in a 4U server chassis, at 700 Watts per GPU for a modern enterprise GPU built for general-purpose operation. At 5600 Watts for the GPUs plus an additional amount for the CPUs and memory, this can add up to 8 kW per server. A 48U racking unit holding 12 of these servers therefore draws 96 kW, providing up to 192K TOPS per rack (96 kW at 2 TOPS/Watt). Chilled liquid is pumped through the racks and servers and then sent to refrigerated chillers to be recirculated. The chillers use either evaporated water from the facility infrastructure or dedicated equipment that itself needs to be cooled to operate efficiently. All of this requires custom heat sinks, custom cooling liquid, custom motherboards, and custom dual power feeds for the racks, since the load exceeds the normal budget of 65 kW for a 48U racking unit.

An inference server with 64 TPUs uses up to 250 Watts for up to 3200 TOPS (in a configuration with Unigen Biscotti AI modules). That heat is spread across the entire width of a 1U or 2U server and can be removed efficiently with laminar-flow fans blowing air over the E1.S or E3.S enclosures. These servers can be arranged in either hot-aisle or cold-aisle containment, with the heat handled through returns to simpler air conditioning or less costly evaporative cooling units. With each server drawing less than 750 Watts, a 48U rack of 1U servers stays at or below about 36 kW while delivering about 153K TOPS. Being well under 65 kW, this setup can use standard power and does not require any custom server hardware, significantly reducing overall costs.
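The rack-level figures in the two paragraphs above can be reproduced with a short calculation. This Python sketch assumes the whole 48U is filled with servers (no space reserved for switches or PDUs), uses the worst-case per-server draw from the text (8 kW per 4U GPU server, 750 W per 1U inference server), and derives the GPU server's throughput as 8 kW at 2 TOPS/Watt. The `RackConfig` class is a hypothetical helper for illustration. Under these assumptions, 48 inference servers at the full 750 W come to 36 kW.

```python
from dataclasses import dataclass

RACK_U = 48              # rack height used in the text
RACK_BUDGET_KW = 65.0    # "normal" power budget for a 48U rack cited in the text


@dataclass
class RackConfig:
    """Hypothetical helper: one server type filling a whole rack."""
    name: str
    server_height_u: int      # chassis height in rack units (U)
    watts_per_server: float   # worst-case draw per server
    tops_per_server: float    # peak throughput per server

    def servers(self) -> int:
        return RACK_U // self.server_height_u

    def rack_kw(self) -> float:
        return self.servers() * self.watts_per_server / 1000

    def rack_tops(self) -> float:
        return self.servers() * self.tops_per_server

    def within_budget(self) -> bool:
        return self.rack_kw() <= RACK_BUDGET_KW


# 4U GPU server: ~8 kW; throughput assumed as 8,000 W x 2 TOPS/W.
gpu_rack = RackConfig("GPU training", 4, 8000, 8000 * 2)
# 1U inference server with 64 TPUs: up to 750 W and 3,200 TOPS.
inference_rack = RackConfig("TPU inference", 1, 750, 3200)

for rack in (gpu_rack, inference_rack):
    print(f"{rack.name}: {rack.servers()} servers, {rack.rack_kw():.0f} kW, "
          f"{rack.rack_tops():,.0f} TOPS, within {RACK_BUDGET_KW:.0f} kW budget: "
          f"{rack.within_budget()}")
```

Expressed per unit of throughput, this works out to 0.5 W per TOPS for the GPU rack versus roughly 0.23 W per TOPS for the inference rack.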

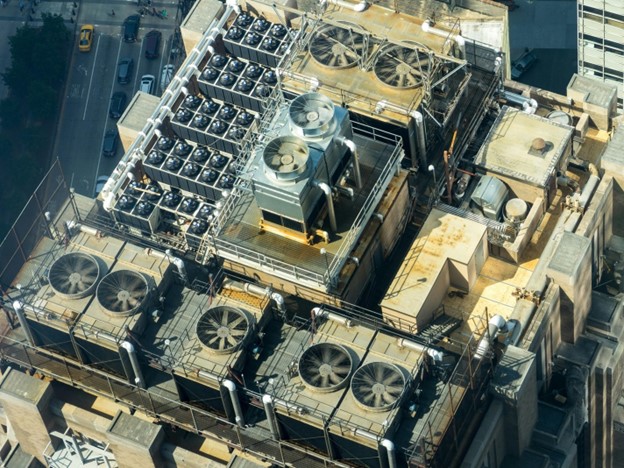

Heating, Ventilation and Air Conditioning (HVAC)

Once the heat has been drawn away from the servers and racks, the liquid or air itself needs to be cooled. With liquid cooling, a data center requires advanced piping and pumps to carry the heated liquid to heat exchangers in the HVAC system, which in turn must be cooled by evaporative cooling or refrigeration. Standard air cooling can use vents and returns to channel heated air to standard air conditioners at a significantly lower capital expense. (source)

Image Source: Western Cooling Efficiency Center, UC Davis

Image Source: Medium

Cost

By using standard, off-the-shelf commodity servers built by high-quality, high-volume manufacturers, the cost of AI server hardware can be lowered dramatically. The main components of a storage server and an AI inference server are nearly identical. Both use a standard server motherboard, standard 16-core server CPUs and heatsinks (AMD or Intel), standard DDR5 RDIMMs, a standard 1U or 2U chassis with high-quality, lower-power (500 Watt) power supplies, and cables, interfaces, and backplanes for Open Compute Project EDSFF (E1.S or E3.S) 4-lane PCIe storage. The only difference in the AI servers is that the SSDs are swapped out for AI modules in identical 9.5mm E1.S or 7.5mm E3.S enclosures. All of these are cooled by standard fans. Because all of these components are standard and produced in large volumes for storage and other segments, costs can be greatly reduced. For example, the chassis is less expensive because the cost of the machines that cut and bend the metal is amortized over a large quantity, making each unit cheaper. The same is true for many of the other components.

In comparison, a GPU server uses a custom chassis, kilowatt-class power supplies, custom heatsinks for liquid-cooled processors, and sometimes immersion tanks. The GPUs themselves, along with the extra DRAM needed to support each GPU, add further to the overall cost. Hosting these GPUs also requires custom motherboards, special power cables, and interfaces capable of carrying the extra power. Since most of these components are custom-built for individual server types, they cannot be interchanged with components from high-volume servers. This adds dramatically to both the cost and the lead time to deliver the servers.

The last element of hardware cost is replacement parts. Custom GPU servers do not use off-the-shelf components that are readily stocked and available. If a component fails, it must be ordered and may need to be built as a one-off, which can be time-consuming and very expensive.

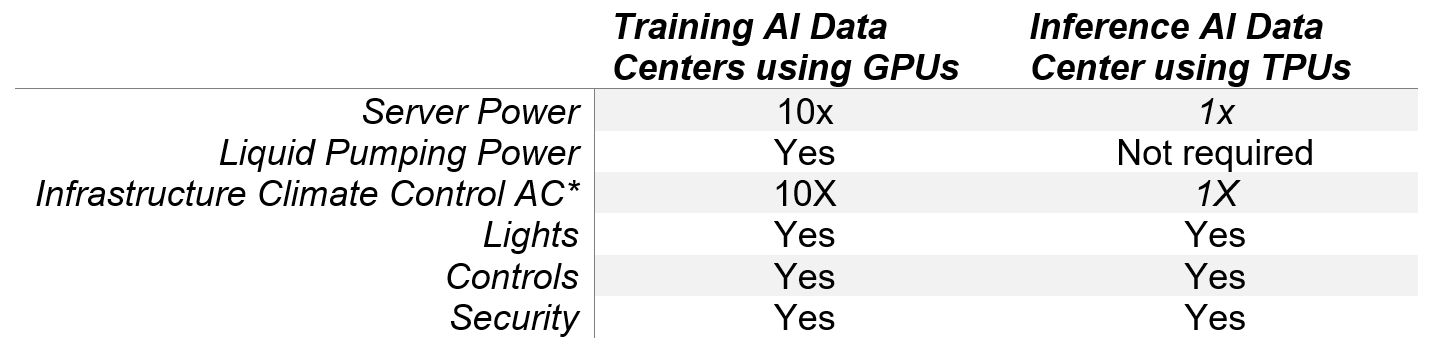

Data Center Power Footprint

The overall power consumed by a data center using inference-only servers vs. training servers can be assessed by combining the following:

- Hot or cold aisle containment and vents/returns

A training data center may require up to five times as much power as an inference-only data center, and therefore significantly more infrastructure for liquid cooling, HVAC, and power lines. Training servers also need up to five times more backup power to keep them running in the event of a power failure. Beyond the higher operating cost, this affects other utilities as well, such as higher water usage and the transformers needed to deliver the required current.

Image Source: Data Center Frontier

This may be acceptable for a custom-built facility, but for smaller colocation data centers, and especially for on-premises use in a corporate facility’s data center room, it can be a showstopper.

Infrastructure (Weight, Financing, Location)

Reducing the total cooling required, by switching from a training data center with liquid-cooled GPU servers to an inference data center with air-cooled TPUs, yields several additional savings.

First, the weight that the racks need to support is reduced, lowering the cost of the racks themselves. The weight-bearing floor infrastructure can also be reduced in size and mechanical strength because the weight of both the servers and the supporting racking structures has been lessened.

Second, the complex chilled-liquid piping is eliminated. This piping is costly both to purchase and to maintain, and it requires extra space to route through the data center.

Third, finding a suitable location for the data center becomes easier: the power required from local utilities decreases by up to 80%, significantly reducing the strain on the power grid.

Finally, the required backup power can be up to 80% less, covering both immediate battery power and the generators that come online in the event of a local power failure due to weather or maintenance issues.
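The "five times" and "80%" figures used in this section are two views of the same ratio: if a training facility needs N times the power of an inference facility, the inference facility needs 1 - 1/N less. A minimal Python sketch of that conversion; the 10 MW facility load is a hypothetical example, not a figure from this paper.

```python
# Minimal sketch: convert a power ratio into a percentage reduction and apply it
# to a facility load. The 10 MW load is a hypothetical example value.

def reduction_percent(power_ratio: float) -> float:
    """Percent less power needed when the other facility needs `power_ratio` times as much."""
    if power_ratio < 1:
        raise ValueError("power_ratio must be >= 1")
    return (1 - 1 / power_ratio) * 100


def reduced_load_mw(load_mw: float, power_ratio: float) -> float:
    """Utility or backup load after dividing by the power ratio."""
    return load_mw / power_ratio


training_load_mw = 10.0  # hypothetical training facility load (utility or backup)
print(f"{reduction_percent(5):.0f}% less power; "
      f"{training_load_mw:.0f} MW becomes {reduced_load_mw(training_load_mw, 5):.0f} MW")
```

The same division applies to both utility capacity and backup capacity, since both are sized to the facility's load.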

Conclusion

Developing inference-only data centers can deliver significant benefits: a lower initial cost than training data centers and a lower Total Cost of Ownership (TCO) over time. There are also significant environmental savings, since inference servers with TPUs can be air-cooled and therefore consume far fewer resources, reducing the strain on local power and water.

There is no doubt that Artificial Intelligence will continue to grow at an exponential rate as corporations and individuals find new beneficial use cases across all market segments. The smaller physical, environmental, and financial footprint of inference-only data centers provides an opportunity to keep up with this growth in a responsible and sustainable way.

About Unigen

Unigen, founded in 1991, is an established global leader in the design and manufacture of original and custom SSD, DRAM, NVDIMM modules and Enterprise IO solutions. Headquartered in Newark, California, the company operates state-of-the-art manufacturing facilities (ISO-9001/14001/13485 and IATF 16949) in Silicon Valley and Hanoi, Vietnam, along with 5 additional engineering and support facilities located around the globe. Unigen markets its products to both enterprise and client OEMs worldwide focused on embedded, industrial, networking, server, telecommunications, imaging, automotive and medical device industries. Unigen also offers best-in-class electronics manufacturing services (EMS), including new product introduction and volume production, supply chain management, assembly & test, TaaS (Test-as-a-Service) and post-sales support. Learn more about Unigen’s products and services at unigen.com.

What is an E1.S/E3.S?

E1.S and E3.S are mechanical and electrical form-factor standards in the EDSFF family, published as SNIA SFF Technology Affiliate specifications and used in Open Compute Project server designs. Both can interface directly with a server’s PCIe bus through a 4-lane connector. An E3.S module can optionally use an 8-lane connector, in which configuration it can draw up to 20 Watts. They were originally intended for enterprise SSD storage, integrating storage controllers, NAND, DRAM, and power loss protection (PLP) circuitry. Although designed for storage, the standard lends itself to any technology that can use the PCIe bus, including AI, networking, and security processors. More information is available on the Open Compute Project website.

Upgrades?

One side benefit of using E1.S or E3.S modules is an easy path to upgrades and maintenance. The standard allows modules to be hot-swapped, so a module can be removed, replaced, or even upgraded without stopping the server, provided the drivers for the new module have already been installed. This is simply not possible for liquid-cooled modules without significant custom design. In addition, most GPU add-in cards plug into PCIe slots (x8 or x16) directly on the motherboard; these slots are generally not hot-pluggable, so a card cannot be removed without shutting off the server’s power.

Glossary of Terms:

- CPU: Central Processing Unit (in servers, typically an x86 processor from Intel or AMD)

- GPU: Graphics Processing Unit. First used to describe a processor with multiple Floating Point Units for processing transform and lighting algorithms, later referred to as shader units.

- TPU: Tensor Processing Unit

- Tensor Calculations: Matrix multiplication, usually using 8-bit integer (INT8) processing, or 4-bit integer (INT4) for large language models.

- Inference AI: In the field of artificial intelligence (AI), inference is the process that a trained machine learning model uses to draw conclusions from new data.

- Training AI: AI training is the process that enables AI models to make accurate inferences using large data sets (either language or images).

- Server: A large enterprise computer capable of running complex calculations and accessed by multiple individuals concurrently.

- Racking Units: A cabinet or vertical metal structure used to hold servers with capabilities for delivering power, cooling, and networking.

- Data Center: A single-purpose building constructed to house many servers fitted into racking units. A small data center might house 100 or 200 servers. A very large data center may house 100,000 servers in a single massive building.

- Colocation Data Center: A custom-designed data center where space, power, and networking are rented to corporations that wish to house one or more racks without having to maintain a space themselves. They are also used by smaller companies, or by companies that need a smaller number of servers located closer to their users.

- HVAC: Heating, Ventilation, and Air Conditioning

- Plug n’ Play: The ability to plug a module into a computer and have it be instantly recognized by the computer without the need for keystrokes.

- Hot Swap: The ability to remove or insert a module in a computer that is powered on, without first turning the computer off and removing it from the racking unit. Without hot-swap capability, removing or inserting the module could damage both the module and the computer through a short circuit.

- EDSFF: The Enterprise and Data Center Standard Form Factor (EDSFF), previously known as the Enterprise and Data Center SSD Form Factor, is a family of solid-state drive (SSD) form factors for use in data center servers.

- Neural Network: A neural network is a method in artificial intelligence that teaches computers to process data in a way that is inspired by the human brain. It is a type of machine learning process, called deep learning, that uses interconnected nodes or neurons in a layered structure that resembles the human brain.

- TCO: Total Cost of Ownership (TCO) can be calculated as the initial purchase price plus costs of operation across the asset’s lifespan.
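As a minimal sketch of the TCO definition above, the Python function below adds the purchase price to the operating costs accrued over the asset's lifespan, and a second helper estimates the electricity share of operating cost from rack power. The rack loads (96 kW and 36 kW) come from the rack figures in this paper; the electricity price, lifespan, and zero purchase price are hypothetical placeholders, and the model deliberately ignores cooling overhead, maintenance, financing, and discounting.

```python
# Minimal TCO sketch: purchase price plus operating costs over the lifespan.
# All monetary inputs below are hypothetical placeholders.

HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(load_kw: float, price_per_kwh: float) -> float:
    """Electricity cost for one year of continuous operation at `load_kw`."""
    return load_kw * HOURS_PER_YEAR * price_per_kwh


def total_cost_of_ownership(purchase_price: float,
                            annual_operating_cost: float,
                            years: int) -> float:
    """TCO = initial purchase price + operating cost for each year of the asset's life."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return purchase_price + annual_operating_cost * years


PRICE_PER_KWH = 0.10   # hypothetical electricity price
LIFESPAN_YEARS = 5     # hypothetical service life
PURCHASE_PRICE = 0.0   # placeholder: substitute the actual hardware quote

for name, rack_kw in (("training rack", 96.0), ("inference rack", 36.0)):
    energy = annual_energy_cost(rack_kw, PRICE_PER_KWH)
    tco = total_cost_of_ownership(PURCHASE_PRICE, energy, LIFESPAN_YEARS)
    print(f"{name}: {energy:,.0f}/year electricity, {tco:,.0f} over {LIFESPAN_YEARS} years")
```

Keeping purchase price and operating cost as separate inputs makes it easy to see how much of the difference between the two rack types comes from power alone.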